From Philadelphia to Luxembourg: RSE Team at Fall Conferences

18 February 2026

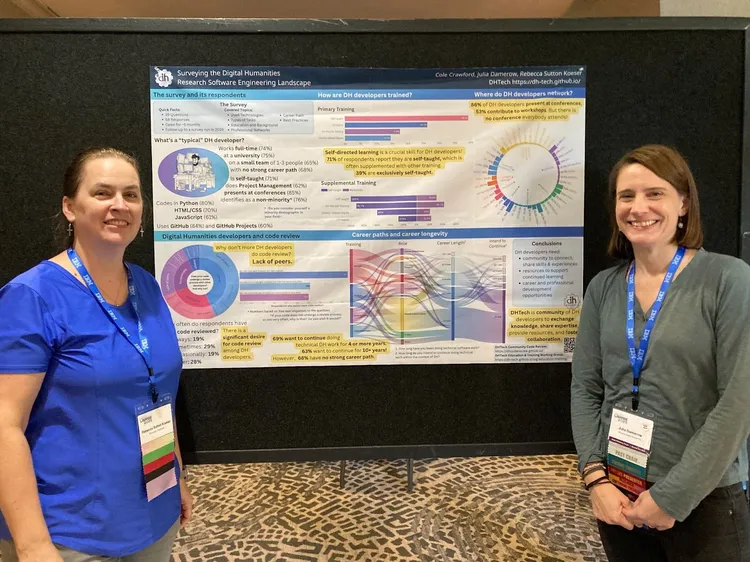

Mary Naydan and Jeri Wieringa present at US-RSE’25 in Philadelphia.

Last Fall, the CDH Research Software Engineering team traveled near and far to share their cutting-edge work and learn from others about new developments in the fields of research software engineering and digital humanities.

🔔 Philadelphia

From October 6–8, the RSE team participated in the third annual conference of the United States Research Software Engineer Association. Held in Philadelphia, US-RSE’25 brought together RSEs from across universities, laboratories, industry, and other institutions, as well as their managers and allies, to discuss “Code, Practices, and People.” CDH represented the small — yet mighty! — Humanities contingent in a field of mostly scientists and social scientists.

US-RSE'25

Lead RSE Rebecca Sutton Koeser presented a notebook on undate, a Python package for computing with uncertain and partially-unknown dates, such as those in Sylvia Beach’s lending library records and in the fragmentary Hebrew texts in the Princeton Geniza Project. Koeser also collaborated on two posters: “Community Code Review in the Digital Humanities,” which detailed the history, process, and future work of the DHTech Code Review Working Group; and “Surveying the Digital Humanities Research Software Engineering Landscape,” which reported on survey results about the backgrounds and career paths of DH developers.

Rebecca Koeser (left); Julia Damerow (right)

Postdoctoral Researcher Christine Roughan presented a paper, also co-written with Koeser, titled “Integrating ATR Software with University HPC Infrastructure: balancing diverse compute needs.” The paper and corresponding presentation described the methods and outcomes of Bringing HTR to the HPC: A Pilot to Customize eScriptorium for Princeton, a Research Partnership with the CDH under the umbrella of the Princeton Open HTR Initiative (funded by a 2024–25 Princeton Language + Intelligence Seed Grant). Conference attendees were fascinated to hear about how Koeser and Roughan implemented an instance of eScriptorium — the current leader in open-source handwritten text recognition software — on Princeton’s high-performance computing hardware, which enabled professors and students without advanced technical skills to train large text-recognition models customized to their documents’ needs.

Christine Roughan presents at US-RSE'25

Assistant Director Jeri Wieringa and Project Manager Mary Naydan presented on a panel about supporting and managing RSE projects. Their presentation “Creating Research Software with Humanities Faculty” highlighted the CDH’s chartering process, which helps transition humanities faculty from the individual, expansive mode of traditional humanities scholarship to the collaborative, modular mode of computational research. Their illustrative opening skit, which set the stage for the talk, drew lots of laughter and resonated with audience members. The rest of the panel was just as engaging, sharing lessons learned from many different types of organizations and fields, from the multi-institutional development of medical technologies, to a large laboratory focused on national security, to a lone RSE’s personal project management workflow at a research university (Naydan’s favorite presentation of the conference!).

Mary Naydan and Jeri Wieringa present at US-RSE’25 in Philadelphia.

The technical talks affirmed that the CDH RSE team is ahead of the curve on best practices in Python development, such as using uv for installing packages and choosing Marimo over Jupyter for notebooks. Koeser noted the field-wide shift in starting to think about notebooks as a form of publication, and CDH Research Software Engineer Hao Tan was inspired by Reed Maxwell’s keynote on creating groundwater simulations using physics-informed machine learning. Tan reflects, “Explainability is still a real issue, but rather than rejecting AI outright, we should learn to leverage its strengths and mitigate its weaknesses — through comparative evaluation, transparent step-by-step reasoning, and other methods we develop.”

Many of the conference’s presentations — from Maxwell’s keynote to the Birds of a Feather workshop “AI in Practice” — showed the field of research software engineering grappling with, adapting to, and incorporating AI. While our team entered the conference thinking our challenges were somewhat unique to the humanities, we were surprised to see RSEs from across disciplines encountering similar challenges around this topic: from defining research questions, to gathering sufficient data, to disabusing researchers about what AI can actually do.

🇱🇺 Luxembourg

Unsurprisingly, AI was also a popular topic at the 2025 Computational Humanities Research Conference, held at the Luxembourg Centre for Contemporary and Digital History (C²DH) at the University of Luxembourg from December 9–12, 2025. Many of the presentations focused on benchmarking various models for automatic transcription tasks, or using chatbots to scale up annotation data, from identifying “acts of God” in contemporary Christian fiction to assessing a popular song’s “narrativity.” Miguel Escobar Varela’s keynote, “‘A watch by Kran Kamu’: Exploratory fine tuning for cultural reliability,” discussed using supervised fine-tuning on large open-weight models to yield reliable results within highly specific cultural contexts, such as Southeast Asian historical newspapers — a common problem facing computational humanities researchers given the specialized nature of our data and the scarcity of it for fine-tuning.

Rebecca Koeser (left); Mary Naydan (right)

Rebecca Koeser and Mary Naydan presented a poster based on their short paper “Unstable Data and the Unusual Case of the Prosody Excerpt in the Digital Library” (co-authored with Meredith Martin). Using the HathiTrust materials contained in the Princeton Prosody Archive as a case study, Koeser and Naydan cautioned researchers that the page-level data provided by cultural heritage aggregators is not as stable as we might assume. This instability can lead to erroneous data, flawed conclusions, and difficulties building on previous scholarship.

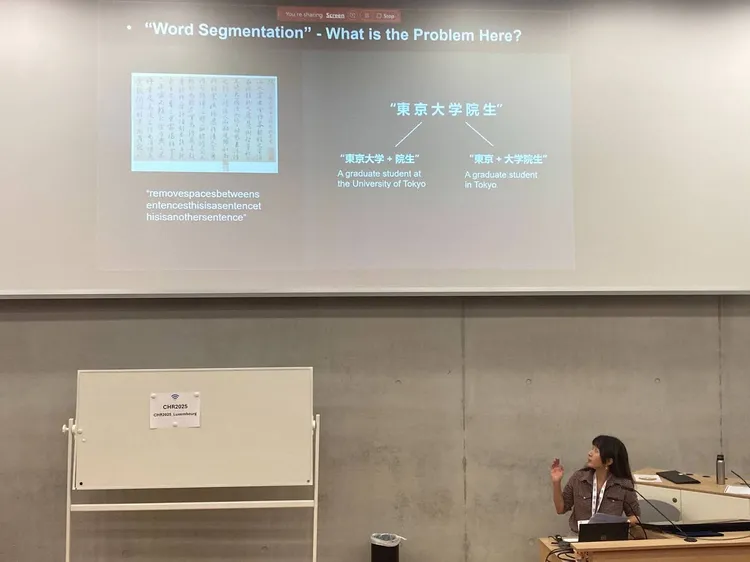

Hao Tan presents at 2025 CHR Conference in Luxembourg.

Hao Tan delivered a lightning talk, “When Larger LLMs Aren’t Enough: Word Segmentation in Historical Chinese Texts,” which used word segmentation in historical Chinese texts as a case study to highlight how large language models, while powerful, can quietly introduce risks when applied to humanities research. The talk sparked conversations with researchers working on East Asian materials across Europe, the US, and Singapore, especially around the tricky parts of historical text processing, with projects ranging from power relations in historical fiction to poetic imagery and stylistic change in epitaphs.

In addition to Tan’s lightning talk, Koeser and Naydan found two others particularly interesting: Katarina Mohar’s on “Speculative Reconstruction and the Ethics of the Fragment: Early Experiments with Generative AI in Art History,” and Antonina Martynenko, Artjoms Šeļa and Petr Plecháč’s on "Where Empires End: Tracing the Geography of a ‘Soaring Spirit’ in Poetry.” Mohar discussed the possibilities and limitations of using Generative AI to fill in gaps in medieval paintings, and provided practical recommendations for how to use it responsibly. Martynenko et al. examined the spatial imagination of European poets by mapping the distance and directionality of place mentions.

For Koeser, one of the most interesting presentations was “Cluster Ambiguity in Networks as Substantive Knowledge,” which describes a method for running a clustering algorithm multiple times to measure how often edge nodes connect nodes in the same community, allowing researchers to identify ambiguous data. Koeser is interested in the interpretive power and potential applications of this method, such as identifying ambiguous characters in novels. Another highlight was Taylor Arnold and Lauren Tilton’s presentation on “Sitcom Form and Function: Pacing and Production in a Collection of Thirty U.S. Series,” which examined how trends in visual and aural pacing changed over time using a combination of large-scale computational analysis and close reading: an example of truly multimodal research and scalable reading.

The RSE team was energized by these shifts in the field: leaning into ambiguity; using audio, video, and visual data rather than defaulting to text; and combining different scales of reading (close and distant) to draw more responsible conclusions. We are excited to carry what we learned into our work this year on multilingual machine translation and term clustering in music theoretical texts and using vision-based LLMs to aid historical document transcription and data extraction.

Photo by Hao Tan in Luxembourg.