Powering Up Grapheme-to-Phoneme Conversion for Old Chinese

8 September 2021

Alan Ding ’22, NLP+Humanities Fellow at the CDH, implemented a neural network to predict the pronunciation of Old Chinese characters.

Alan Ding is a senior pursuing a major in computer science and a certificate in statistics and machine learning. This summer, Alan served as the NLP+Humanities Summer Fellow at the CDH.

During the previous spring semester, Professor Christiane Fellbaum (Computer Science) introduced me to a wonderful opportunity to work as a summer fellow with the Center for Digital Humanities. Going in, I had little idea of what the fellowship would entail, let alone which languages, teams, and tools I would end up working with. The extent of my academic experience with anything related to language was a freshman seminar on the evolution of human language and a junior independent work seminar on natural language processing, both taught by Professor Fellbaum. However, trusting her judgment, I dived right into exploring some of the projects that were looking for assistance.

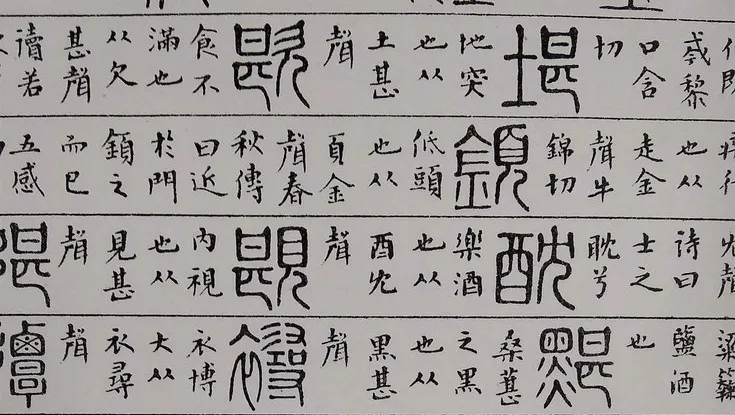

As someone who speaks (modern) Chinese and has interests in music and sound, I eventually found my way onto the DIRECT (Digital Intertextual Resonances in Early Chinese Texts) team, whose members include CDH Digital Humanities Developer Nick Budak and East Asian Studies graduate students Gian Duri Rominger and John O’Leary. The problem this team originally set out to tackle was comparing two texts by phonological similarity rather than by characters. This is useful because in texts from the late Warring States period (about 350 BCE to 221 BCE), characters were used more phonetically: characters pronounced similarly or identically could be used interchangeably, unlike in modern Chinese, where such substitutions are considered errors. As a result, comparing texts phonetically is better at identifying text reuse across separate texts than comparing them character by character.
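To make the contrast concrete, here is a minimal sketch of comparing two passages by character and by sound. It is an illustration only, not the DIRECT team's method: the characters are generic placeholders, the readings `r1`, `r2`, `r3` are made-up labels rather than real Old Chinese reconstructions, and `to_readings` and `match_ratio` are hypothetical helper names.

```python
# Toy reading table. The labels are placeholders, NOT real reconstructions.
TOY_READINGS = {"甲": "r1", "乙": "r1", "丙": "r2", "丁": "r3"}


def to_readings(text, readings):
    """Map each character to its reading; unknown characters map to themselves."""
    return [readings.get(ch, ch) for ch in text]


def match_ratio(seq_a, seq_b):
    """Fraction of aligned positions that agree (equal-length sequences only)."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must have the same length")
    if not seq_a:
        return 0.0
    return sum(a == b for a, b in zip(seq_a, seq_b)) / len(seq_a)


# Two passages that differ in one character, where the differing
# characters share a (placeholder) reading.
passage_a = "甲丙丁"
passage_b = "乙丙丁"

char_score = match_ratio(list(passage_a), list(passage_b))
sound_score = match_ratio(
    to_readings(passage_a, TOY_READINGS),
    to_readings(passage_b, TOY_READINGS),
)
```

Compared character by character, the two passages disagree in one position out of three. Compared by reading, they match everywhere, which is the kind of reuse a character-level comparison would miss. A real comparison would also need to handle passages of different lengths and readings that are similar but not identical.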


Naturally, to identify similar-sounding passages between texts, we must start by reconstructing how the text sounds. This step is referred to as grapheme-to-phoneme conversion in linguistics circles. Prior to my work with DIRECT, the team had relied on Baxter and Sagart’s 2014 phonological reconstruction of Old Chinese. For most characters, simply looking up the character’s single recorded pronunciation suffices. However, some characters are polyphones (characters with multiple pronunciations), and others are not in the Baxter-Sagart reconstruction at all. The team did not have any way of dealing with these pitfalls, so my work served as a starting point for resolving them.
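The three cases described above (one pronunciation, several, or none) can be sketched as a simple dictionary lookup. This is a minimal illustration under stated assumptions: `TOY_LEXICON` stands in for a pronunciation table, its readings are placeholder labels rather than actual Baxter-Sagart entries, and `LookupStatus`, `look_up`, and `convert` are hypothetical names.

```python
from enum import Enum


class LookupStatus(Enum):
    UNIQUE = "unique"        # exactly one recorded pronunciation
    POLYPHONE = "polyphone"  # several candidate pronunciations
    MISSING = "missing"      # character not in the table at all


# Hypothetical stand-in for a pronunciation table; readings are placeholders.
TOY_LEXICON = {
    "甲": ["r1"],
    "乙": ["r2", "r3"],
}


def look_up(char, lexicon):
    """Return the lookup status of a character and its candidate readings."""
    readings = lexicon.get(char, [])
    if not readings:
        return LookupStatus.MISSING, []
    if len(readings) == 1:
        return LookupStatus.UNIQUE, readings
    return LookupStatus.POLYPHONE, readings


def convert(text, lexicon):
    """Look up every character, keeping track of which ones need more work."""
    return [(ch, *look_up(ch, lexicon)) for ch in text]


result = convert("甲乙丙", TOY_LEXICON)
```

Here `甲` resolves directly, `乙` is flagged as a polyphone that needs disambiguation from context, and `丙` is flagged as missing. Only the first case is settled by lookup alone; the other two are the pitfalls the rest of this post addresses.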

To solve the polyphone disambiguation problem (selecting the correct pronunciation for a polyphone), I implemented a neural network to be trained on a dataset of polyphones, each labeled with its correct pronunciation. The network architecture was proposed in a paper by Dai et al. [1] and shown to perform the best for modern Chinese polyphone disambiguation. The first layer of the network is a BERT layer, which transforms a sequence of characters into vectors that, in a sense, embed contextual and semantic information about the sequence. For this step, I was able to leverage GuwenBERT, a pre-trained Old Chinese BERT model.

For characters without a pronunciation in Baxter-Sagart, I used GuwenBERT again to build a masked language model, whose purpose is to predict missing (masked) characters in a sequence. In particular, I used this masked language model to predict alternative characters that do appear in Baxter-Sagart, hoping that the prediction uncovers a related character with a similar, known pronunciation.
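The fallback for characters missing from the reconstruction can be sketched roughly as follows. This is a sketch under assumptions, not the actual implementation: `predict_masked` is a hypothetical callable that would wrap a masked language model such as GuwenBERT and return (character, score) candidates for a masked position; `fallback_reading`, the `top_k` cutoff, and the placeholder readings are likewise illustrative. A stub predictor is used so the example runs without any model.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Candidate = Tuple[str, float]  # (predicted character, model score)
MaskedPredictor = Callable[[Sequence[str], int], List[Candidate]]


def fallback_reading(
    chars: Sequence[str],
    position: int,
    lexicon: Dict[str, List[str]],
    predict_masked: MaskedPredictor,
    top_k: int = 10,
) -> Optional[Tuple[str, List[str]]]:
    """Borrow readings from the best-scoring predicted character in the lexicon.

    Returns (substitute character, its readings), or None if none of the
    top_k predictions has a known reading.
    """
    target = chars[position]
    candidates = sorted(
        predict_masked(chars, position), key=lambda c: c[1], reverse=True
    )
    for cand, _score in candidates[:top_k]:
        # Skip the unknown character itself and anything without a reading.
        if cand != target and cand in lexicon:
            return cand, lexicon[cand]
    return None


# Stub standing in for a real masked language model (assumption: a real
# predictor would mask chars[position] and score the model's vocabulary).
def stub_predictor(chars: Sequence[str], position: int) -> List[Candidate]:
    return [("甲", 0.3), ("戊", 0.5), ("乙", 0.2)]


TOY_LEXICON = {"甲": ["r1"], "乙": ["r2", "r3"]}  # placeholder readings

guess = fallback_reading(list("丁丙丁"), 1, TOY_LEXICON, stub_predictor)
```

With the stub, the top-scoring prediction `戊` has no known reading, so the function falls through to `甲` and borrows its reading. If the substitute is itself a polyphone, all of its readings are returned and would still need disambiguation.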

Admittedly, the masked language model approach will predict an incorrect pronunciation more often than a correct one. However, when it comes to identifying text reuse, a prediction with a low chance of being correct is already an improvement over no prediction at all. More practically, I had to consider the amount of labeled data we would eventually have available to train whatever model I came up with. Generating labeled data remains a work in progress, and even when all is said and done, we will probably end up with only somewhere on the order of ten thousand labeled examples. With so little data relative to the complexity of the output space, training a single network to solve the whole grapheme-to-phoneme problem in one fell swoop is effectively out of reach. Tweaking the network architecture and parameters will likely be necessary moving forward.

Overall, I am extremely glad to have taken part in this opportunity with the CDH. While work on this will inevitably continue, I am happy to have jump-started an approach to this interesting and relatively understudied area of research. I would especially like to thank Professor Christiane Fellbaum for making me aware of this opportunity, and CDH Associate Director Natasha Ermolaev and the DIRECT team for coordinating this effort and empowering me to contribute meaningfully!

[1] Dai et al., “Disambiguation of Chinese Polyphones in an End-to-End Framework with Semantic Features Extracted by Pre-trained BERT,” 2019.

Editor’s Note: This post is part of a series on undergraduate engagement at the Center for Digital Humanities. Check out last week’s post to learn more about the work of the Princeton Prosody Archive summer interns.