Scaling Multilingual Evaluation of LLMs to Many Languages

Speakers

- David Ifeoluwa Adelani

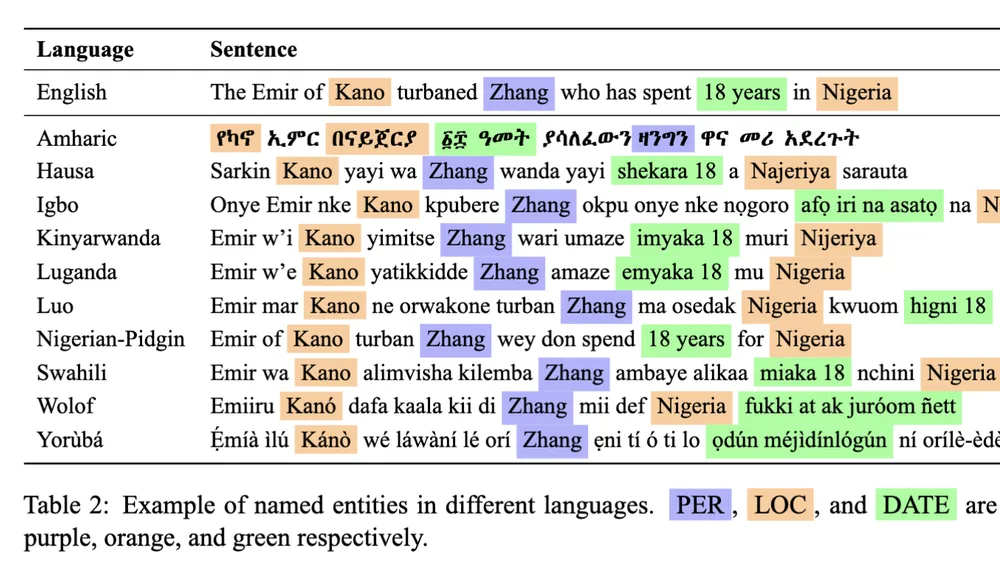

Despite the widespread adoption of Large Language Models (LLMs), their remarkable capabilities remain limited to a few high-resource languages. In this talk, David Ifeoluwa Adelani will describe different approaches to scaling evaluation to many languages. He will describe simple strategies for extending multilingual evaluations by repurposing existing English datasets to over 200 languages for both text (SIB-200) and speech (Fleurs-SLU) modalities. He will also introduce IrokoBench, a human-translated benchmark dataset for 17 typologically diverse low-resource African languages covering three tasks: natural language inference, mathematical reasoning, and multiple-choice knowledge-based question answering. The talk will conclude with highlights from recent projects that make some of these challenging datasets more multicultural for visual question answering and intent detection tasks, to encourage practical usage of LLMs within low-resource communities.

Dr. David Adelani is an Assistant Professor at the McGill University School of Computer Science, a Core Academic Member at Mila - Quebec AI Institute, and a Canada CIFAR AI Chair. Before his appointment at McGill University, he was a postdoctoral and DeepMind fellow at University College London, United Kingdom. He received his Ph.D. in Computer Science from the Department of Language Science and Technology at Saarland University in Germany in 2023, where he was awarded a Dr. Eduard-Martin Outstanding Doctoral Prize. His research interests include multilingual natural language processing with a focus on low-resource languages, speech processing, privacy, and safety of large language models. With over 40 publications in leading NLP and Speech Processing venues like ACL, TACL, EMNLP, NAACL, COLING, and Interspeech, he has made significant contributions to NLP for low-resource languages. Notably, one of his publications received the Best Paper Award (Global Challenges) at COLING 2022 for developing AfroXLMR, a multilingual pre-trained language model for African languages. Other notable awards include an Area Chair Award at IJCNLP-AACL 2023 and an Outstanding Paper Award and Best Theme Paper Award at NAACL 2025.

Related events

African Languages in the Age of AI (AAA) Speaker Series

Bringing leading scholars to Princeton to discuss the opportunities and challenges for developing technologies that empower African languages

Towards AI Models That Can Visually Understand the World's Cultures

A New Agenda for African Languages x AI: Everything, Everywhere, All At Once

Related research group

Infrastructure for African Languages

Increasing representation of African languages in NLP, LLMs, and AI