Machine Learning & the Future of Philology: A Student Perspective

10 January 2023

Kurt Lemai ’25 participated in the recent symposium, presenting as part of a panel called “Reading an Unreadable Sermon: AI Text Recognition in the Early Colonial Period.”

The Center for Digital Humanities, along with the Manuscript, Rare Book and Archive Studies Initiative, and the Center for Statistics and Machine Learning, recently co-hosted a symposium called Machine Learning and the Future of Philology. This symposium brought together Princeton scholars whose work intersects with machine learning and projects relating to manuscripts, rare books, coins, and other texts in a variety of languages, from Syriac, to Chinese, to English.

Kurt Lemai (Comparative Literature, ’25) is an undergraduate student and a co-leader of the Student Friends of Princeton University Library. He participated in the symposium, presenting as part of a panel called “Reading an Unreadable Sermon: AI Text Recognition in the Early Colonial Period,” alongside Gabriel Swift (Special Collections, Princeton University Library) and Seth Perry (Religion, Princeton University).

I interviewed Kurt about his experience participating in the symposium and what he learned from researching and presenting on AI text recognition in seventeenth-century documents.

What were the driving questions of the panel, “Reading an Unreadable Sermon: AI Text Recognition in the Early Colonial Period”?

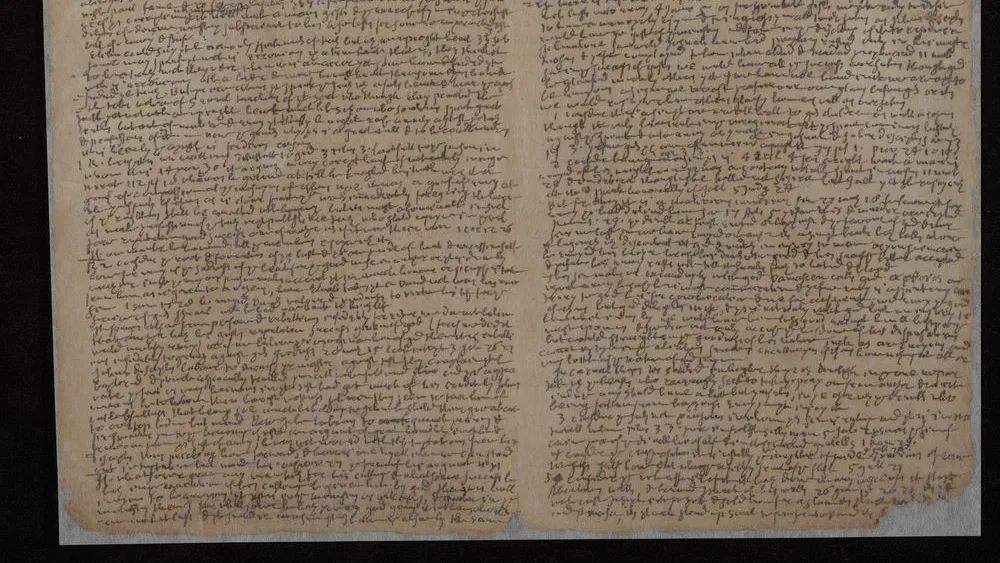

My project set out to see whether Transkribus, a handwritten text recognition program, could help us read a broadside by Reverend Samuel Phillips, who was a pastor in Rowley, Massachusetts, in the mid- to late 17th century. The handwriting in the document is particularly bad, making it nearly indecipherable to researchers. We were also interested in the learning curve involved in using Transkribus, since we hope that it will allow more students who do not have formal experience in the field to work on primary documents. Cursive is not as commonly taught today, so Transkribus may help students develop the skills required to read handwritten documents, whether through a direct transcription or by showcasing examples of written words in context.

What were the main findings of your panel?

For our project, we were able to train a model on a set of transcriptions of Phillips’s church records produced by Lori Stokes and Helen Gelinas. That manuscript is more legible than our own document, so with it as the foundation, our model reached roughly 90% accuracy in recognizing characters, tested on a set of pages held out from the church records. On our own broadside, Transkribus was able to create a basic but flawed transcription. I then edited it to expand abbreviations, verifying each expansion by using Transkribus to search through the church records. Some examples of the abbreviations I found were “Xst” for Christ, “yt” for that, and “pp” for people. In a separate document I would highlight these words and replace them with the full word.

With the assistance of Professor Perry and librarians, we could identify passages in Latin as well as biblical references, which helped to improve our understanding of the broadside. It was through this process that we learned that Rev. Phillips was writing a preacher's notes for a talk about envy, homing in on pastors who preached out of a personal desire for fame and material gain rather than for the love of God.
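For readers curious what the abbreviation step might look like if automated, here is a minimal sketch in Python. It is not the workflow Kurt describes, which was done by hand in a separate document. The lookup table contains only the three abbreviations mentioned in the interview, and the function name `expand_abbreviations` and the sample line are invented for illustration.

```python
import re

# Hypothetical lookup table. Only these three abbreviations come from the
# interview; a real project would build a longer, verified list.
ABBREVIATIONS = {
    "Xst": "Christ",
    "yt": "that",
    "pp": "people",
}

# Match whole words only, so the "pp" inside a word like "apple" is left alone.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(abbr) for abbr in ABBREVIATIONS) + r")\b"
)


def expand_abbreviations(text: str) -> str:
    """Replace each known abbreviation in text with its full form."""
    return _PATTERN.sub(lambda match: ABBREVIATIONS[match.group(1)], text)


# Invented sample line, not a quotation from the broadside.
sample = "yt the pp may follow Xst"
print(expand_abbreviations(sample))
```

The match is case-sensitive and limited to whole words, so anything outside the table is left untouched for a human editor to check against the church records.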

How did you become involved in this project?

During the summer, I began to work with Gabriel Swift, the librarian for the American West. He would give me projects to work through, generally involving some process of automation through coding, such as compiling catalog records. I was interested in learning Python and had a small amount of experience in computer science, so this put me in a good position to work on these types of projects. Gabriel had looked at Reverend Phillips’s document before with Professor Perry, and they were interested in having a student figure out a way to generate a transcription. When they discovered Transkribus, this became one of my projects. I was thrilled that Transkribus allowed me to enter the field of machine learning, which had always seemed so out of reach to me. Being able to learn about the way that the program works and eventually produce a tangible product was deeply fulfilling.

What was your experience like participating on the panel?

This was my first time attending an academic meeting, so I honestly felt slightly overwhelmed by the constant stream of new vocabulary to google, but it was thrilling to get a glimpse of the highest level of academic work. I was on the edge of my seat hearing about the presenters' work, stunned by every revelation of techniques, ideas, and approaches. Seeing some of the top researchers in the field talk about the work that they have done over the years motivated me and instilled a stronger desire to keep learning so that I can understand more of their talks.

What did you learn from participating in this research and from the symposium?

I would say that the most significant thing I learned from this project was simply the overwhelming potential and power that exist at the intersection of computer science and the humanities. It may be obvious to those already in the digital humanities, but seeing just how deep the hole can go is miraculous to me. To identify a document based on a long fragment of paper with maybe 2–3 words per line, with the help of corpus search tools, is incredible.

The symposium as a whole focused on examining how new tools in AI, corpus management, and other areas are helping drive research in philology. Some of the questions raised were whether AI can be trained to read primary documents in Syriac, to parse the information in the Geniza fragments, or to detect rhyme in classical Chinese poetry. More broadly speaking, the symposium looked at how interesting research questions can guide the development and use of computer science to glean new knowledge from large amounts of data. In Professor Rustow’s presentation [on material related to the Princeton Geniza Project], for example, she talked about how large amounts of material can be difficult to parse, but with the brute force of computation, it becomes possible to save humans time and allow them to identify and then focus on the most significant documents. A common theme in the talks was that these programs are still fundamentally tools, which need the direction and questions of researchers to produce quality results. It is this alliance between man and machine that I hope to continue studying in the future.

Thank you, Kurt! Machine Learning and the Future of Philology is intended to be a two-part series; the second symposium will take place in 2023–2024.

Carousel Image: from A Council Held at Boston March 8, 1679, 80 (Princeton University Library Special Collections)