Mistakes to avoid when using Twitter data for the first time

19 March 2021

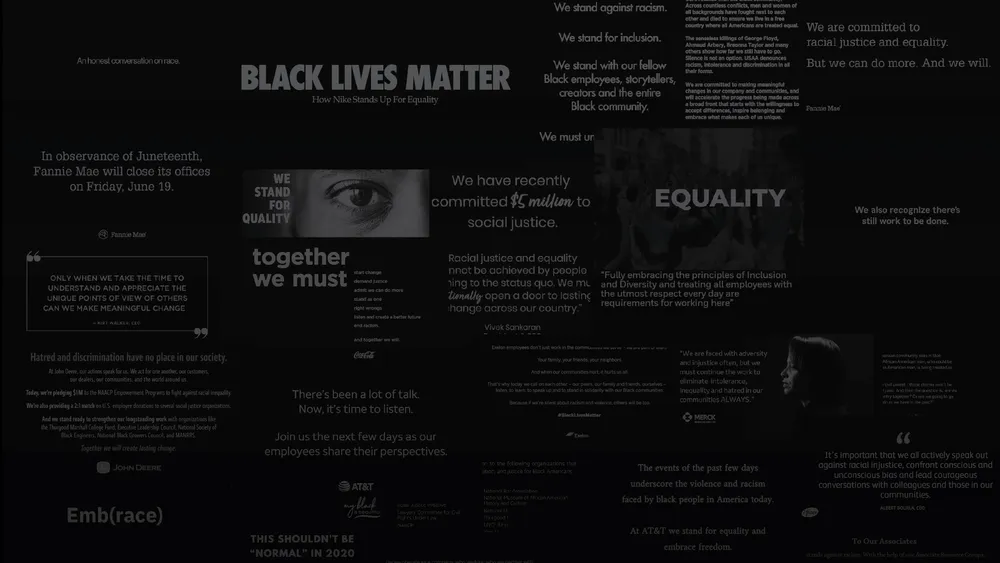

I just finished putting a lot of time into collecting, cleaning, and analyzing Fortune 100 tweets from the 2020 Black Lives Matter protests. After a few thousand tweets, I hit plenty of hurdles and corner cases. Below I’ve listed some mistakes that I’ve encountered while working with the Twitter API.

Many of these trip-ups are addressed in Twitter’s documentation. It’s okay to dip your toes into web scraping and bot-making without reading everything, but make sure to read through the field descriptions and limitations of the API before doing anything important! For those just getting started, here is a video tutorial and here is a quick blog post, though these are by no means the only tutorials out there.

Make sure to get the full text data.

This is a pretty standard slip-up: tweets used to be limited to 140 characters, but the limit is now 280. Whether you are making a direct API query or using a wrapper, make sure to set "extended" equal to true (by default, this value is set to false). In the v1.1 REST API this means passing tweet_mode=extended; wrappers may expose the option under a different name. The parameter tells Twitter that you want the full 280 characters.

When applying any kind of validator to your data set, I recommend including something along the lines of:

    for tweet in dataset:
        assert not tweet['truncated']

This will help ensure that the full tweets were captured on each scrape.
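As a slightly fuller version of that check, here is a minimal sketch that assumes each tweet has already been parsed into a dict. The helper names `get_full_text` and `find_truncated` and the sample tweets are hypothetical. The field names ("full_text" for REST responses in extended mode, "extended_tweet" for streamed tweets, "text" otherwise) reflect my understanding of the v1.1 payloads and are worth checking against your own data.

```python
def get_full_text(tweet):
    """Return the longest text available on a tweet dict (hypothetical helper)."""
    # Streamed tweets nest the long form under "extended_tweet".
    extended = tweet.get("extended_tweet")
    if extended and "full_text" in extended:
        return extended["full_text"]
    # REST responses fetched in extended mode carry "full_text";
    # anything else only has the possibly shortened "text".
    return tweet.get("full_text", tweet.get("text", ""))


def find_truncated(dataset):
    """Return the id_str of every tweet flagged as truncated with no full version attached."""
    return [
        tweet.get("id_str")
        for tweet in dataset
        if tweet.get("truncated", False) and "extended_tweet" not in tweet
    ]


# Made-up sample data for illustration only.
sample_tweets = [
    {"id_str": "1", "truncated": False,
     "full_text": "A full-length tweet fetched in extended mode"},
    {"id_str": "2", "truncated": True,
     "text": "A streamed tweet that was cut off…",
     "extended_tweet": {"full_text": "A streamed tweet that was cut off, with the rest nested"}},
    {"id_str": "3", "truncated": True,
     "text": "A tweet fetched without extended mode…"},
]

problem_ids = find_truncated(sample_tweets)
print(problem_ids)
```

Collecting the offending IDs instead of asserting on the first one makes it easier to re-scrape only the tweets that need it.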

Standardize carriage returns, because Twitter doesn’t.

Both "\r\n" and "\n" can appear in your tweets. When creating a CSV from the JSON response, this can cause problems: a CSV with two different line endings will confuse pandas and goes against agreed-upon standards. Perhaps the easiest way to handle this is to use Python’s repr function, which writes special characters as explicit escape sequences. This forces everything onto one line and prevents confusion:

    >>> lengthy_string = "Look at these cat pictures\n#cats"
    >>> print(lengthy_string)
    Look at these cat pictures
    #cats
    >>> print(repr(lengthy_string))
    'Look at these cat pictures\n#cats'

However, this method will add steps later if you’re looking to analyze your text data. Another reasonable fix is to simply replace "\r" with a space using str.replace('\r', ' '), but whether that is appropriate depends on your research question.
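A third option, if you would rather keep real line breaks, is to normalize every line ending to "\n" and let the CSV writer quote the field. The sketch below uses only Python’s standard csv and io modules. The helper names `normalize_newlines` and `tweets_to_csv` and the sample tweets are hypothetical, and it assumes each tweet is a dict with "id_str" and "full_text" keys.

```python
import csv
import io


def normalize_newlines(text):
    # Turn Windows-style and bare carriage returns into a plain newline.
    return text.replace("\r\n", "\n").replace("\r", "\n")


def tweets_to_csv(tweets):
    """Write tweets to CSV text with every field quoted (hypothetical helper)."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer, quoting=csv.QUOTE_ALL, lineterminator="\n")
    writer.writerow(["id_str", "full_text"])
    for tweet in tweets:
        writer.writerow([tweet["id_str"], normalize_newlines(tweet["full_text"])])
    return buffer.getvalue()


# Made-up sample data mixing both kinds of line ending.
sample_tweets = [
    {"id_str": "1", "full_text": "Look at these cat pictures\r\n#cats"},
    {"id_str": "2", "full_text": "Line one\rLine two"},
]

csv_text = tweets_to_csv(sample_tweets)

# Reading the text back shows that the quoted line breaks survive the round trip.
rows = list(csv.reader(io.StringIO(csv_text, newline="")))
print(rows[1])
```

Quoted fields may legally contain line breaks, so a parser that respects quoting should read each tweet back as a single row.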

Use the correct field to determine retweets

The most reliable way of determining whether a tweet is a retweet is seeing whether the "retweeted_status" field or "quoted_status" field exists.

    # For tweets parsed into dicts; with a wrapper object, use hasattr(tweet, 'retweeted_status') instead
    is_retweet = 'retweeted_status' in tweet
    is_quoted = 'quoted_status' in tweet

While parsing through the tweet JSON, these fields aren’t obvious. The "retweeted" field is a red herring: it indicates whether the authenticating user (the person who registered the API token) has retweeted the tweet. And looking for "RT @" at the beginning of the text is unreliable.

Remember that these two fields are nullable, meaning that since the original tweet can be deleted, the value associated with the "retweeted_status" field can be null. Therefore, you need to look for the existence of these fields. Don’t make some kind of truthy conditional for them. Something like…

    if tweet.get('retweeted_status'):
        print('is_retweet')

…would incorrectly characterize a retweet as a regular tweet if the original was deleted.
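To make the difference concrete, here is a small sketch comparing the existence check with the truthy check. It assumes tweets are plain dicts. The function names and the sample tweet are hypothetical, and the null "retweeted_status" mimics the deleted-original case described above.

```python
def classify_tweet(tweet):
    """Classify by whether the key exists, regardless of its value."""
    if "retweeted_status" in tweet:
        return "retweet"
    if "quoted_status" in tweet:
        return "quote"
    return "original"


def classify_tweet_truthy(tweet):
    """The flawed version: a null field is treated as if it were missing."""
    if tweet.get("retweeted_status"):
        return "retweet"
    if tweet.get("quoted_status"):
        return "quote"
    return "original"


# Made-up retweet whose original has been deleted, leaving the field null.
orphaned_retweet = {
    "id_str": "10",
    "full_text": "RT @someone: a tweet that no longer exists",
    "retweeted_status": None,
}

by_existence = classify_tweet(orphaned_retweet)
by_truthiness = classify_tweet_truthy(orphaned_retweet)
print(by_existence, by_truthiness)
```

Only the existence check still recognizes the orphaned retweet; the truthy version files it under regular tweets.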

Determining whether a tweet is a reply is complicated.

It turns out that there is not a single, uniform way to determine whether a tweet is a reply. Some online commenters recommend looking for the field "in_reply_to_status_id" in the tweet JSON. But some normal tweets have this field, just set to null. You can see if the field is null, but that too is unreliable. "in_reply_to_status_id" becomes null if the original tweet is deleted, and "in_reply_to_user_id" becomes null if a user deletes their account. And of course not every tweet starting with "@" is a reply; a tweet is considered a reply only if it’s in response to a particular status.

In short, there isn't a reliable way to do this. Thankfully, this problem is addressed in v2 of Twitter's API. For more information, read this discussion on the Twitter developers community forum. You can also filter by replies in your API request.
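If you are stuck with v1.1 data, one way to be honest about this uncertainty is to record three states instead of two. The sketch below is a best-effort heuristic, not a reliable detector: it returns True when any of the reply fields is populated and None ("can't tell") otherwise, because a null field may just mean the parent tweet or account is gone. The function name `reply_signal` and the sample tweets are hypothetical.

```python
# Reply-related fields on a v1.1 tweet object.
REPLY_FIELDS = (
    "in_reply_to_status_id_str",
    "in_reply_to_user_id_str",
    "in_reply_to_screen_name",
)


def reply_signal(tweet):
    """Return True if any reply field is populated, else None (unknown)."""
    if any(tweet.get(field) is not None for field in REPLY_FIELDS):
        return True
    # All fields are null or missing: this may be a normal tweet, or a reply
    # whose parent was deleted. The payload alone can't distinguish them.
    return None


# Made-up sample tweets for illustration.
clear_reply = {"id_str": "20", "in_reply_to_status_id_str": "19",
               "in_reply_to_user_id_str": "7"}
parent_deleted = {"id_str": "21", "in_reply_to_status_id_str": None,
                  "in_reply_to_user_id_str": "7"}
no_evidence = {"id_str": "22", "in_reply_to_status_id_str": None,
               "in_reply_to_user_id_str": None}

signals = [reply_signal(t) for t in (clear_reply, parent_deleted, no_evidence)]
print(signals)
```

Keeping the "unknown" state separate lets you decide later whether to drop those tweets, count them as regular tweets, or check them by hand.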

Don’t store Tweet IDs as integers.

Tweet IDs are very large numbers, and some software will round integer IDs without you knowing, typically because it stores numbers as 64-bit floats, which cannot represent every integer of that size exactly. Twitter recommends that you work exclusively with the "id_str" field. If your program treats the ID as a string, it can’t be rounded the way a number can. Most programs can handle the current size of Twitter IDs as integers, so you’re welcome to work with the integers at your own risk, but consider double-checking that the "id_str" field is equal to the "id" field during your validation process.
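Here is a short sketch of both the failure and the check. The ID is made up but has a realistic size. Python’s own integers are exact, so the rounding is simulated by passing the ID through a float, which is what happens when a tool stores it as a 64-bit floating-point number. The helper name `id_matches_id_str` is hypothetical.

```python
# Made-up tweet with an ID of realistic size.
tweet = {"id": 1372940958613692417, "id_str": "1372940958613692417"}

# Simulate a tool that stores numbers as 64-bit floats.
as_float = float(tweet["id"])
rounded_id = int(as_float)

# The round trip silently lands on a different integer.
print(rounded_id == tweet["id"])


def id_matches_id_str(tweet):
    """Validation check: the integer ID must spell out exactly like id_str."""
    return str(tweet["id"]) == tweet["id_str"]


print(id_matches_id_str(tweet))
print(id_matches_id_str({"id": rounded_id, "id_str": tweet["id_str"]}))
```

Running a check like `id_matches_id_str` after every load or export step should catch a tool that has quietly converted the IDs to floats.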

Other tips that made my life easier

- Microsoft Edge loaded tweets faster than Firefox or Chrome. This can be useful if you need to scroll through many tweets.

- Remember that not all the language of a tweet is included in the "full_text" field. If you’re doing textual analysis, you may have to consider the text in images or videos as well if it’s important to your research. If you need to download the media associated with a tweet, here’s a link to a snippet.

- Make sure you’re familiar with the limitations of the Twitter API. You can only get the latest 100 retweets, you can only view the latest 3,200 tweets on a profile, you can only get the latest 75k followers of a given user every 15 minutes. Programmers misunderstanding these limitations have led to huge software failures, so keep them in mind when creating something that’s supposed to be reliable.